ChatGPT is a Hallucination

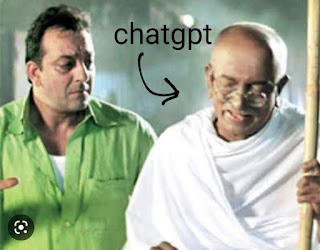

There's a Bollywood movie in which the protagonist studies the life of Mahatma Gandhi for weeks on end, spending night after night in the library, until one day he starts talking with Mahatma Gandhi himself. Well, really just a hallucinated version of him.

Throughout the movie, the hallucinated character helps the protagonist lead a life of Gandhigiri. He also helps him answer peculiar questions about Gandhi's life, which, one would agree, is a cool thing to have around. Except one day the antagonist asks the protagonist to put a very specific question to Gandhi, something only Gandhi himself would know.

At that point, his hallucination goes silent.

To me, that's ChatGPT.

It's really helpful with things I already know about. It can write scripts which would otherwise take me an hour to write.

It's also great for normal chit-chat and for playing the unbiased judge of morals and values.

But for things that are very specific, like how to get Bazel to include the exported targets in the output jar, it fails.

Very much like the hallucinated character from the movie.
